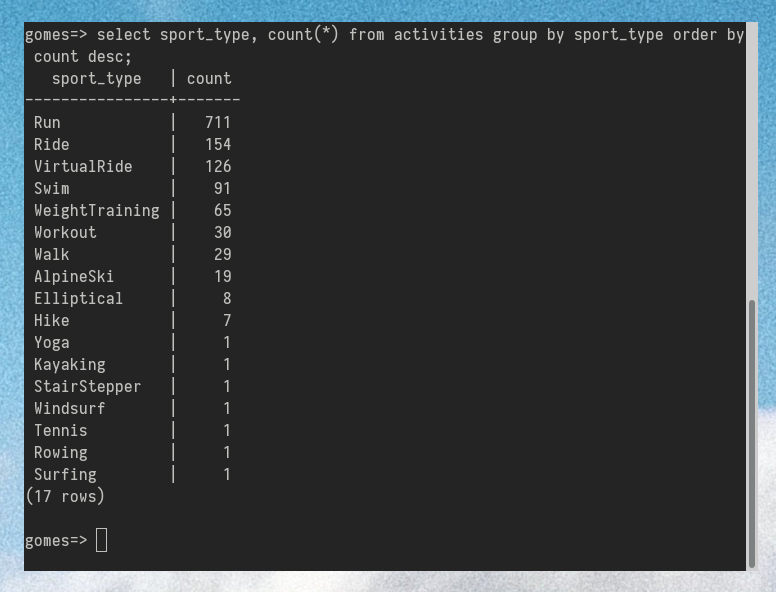

Loading Strava Activities Into a SQL Database and Using English to Ask Questions

I’m a Strava user and log all of my workouts there. Recently, I’ve been wondering if I could find a way to ask questions about my Strava activities in plain English. Here’s some examples:

- “How long is my usual run?”

- “How frequently do I run per week?”

- “What time of the day to I tend to run at, and does that change during the year?”

- “Is there any correlation between my running performance and the weather?”

At the same time, I’d like all of my Strava data to be accessible with good old SQL. That'll be useful as well.

So, as fun Saturday hackathon, I put together a “strava-postgres” repository on GitHub that allows anybody to achieve this.

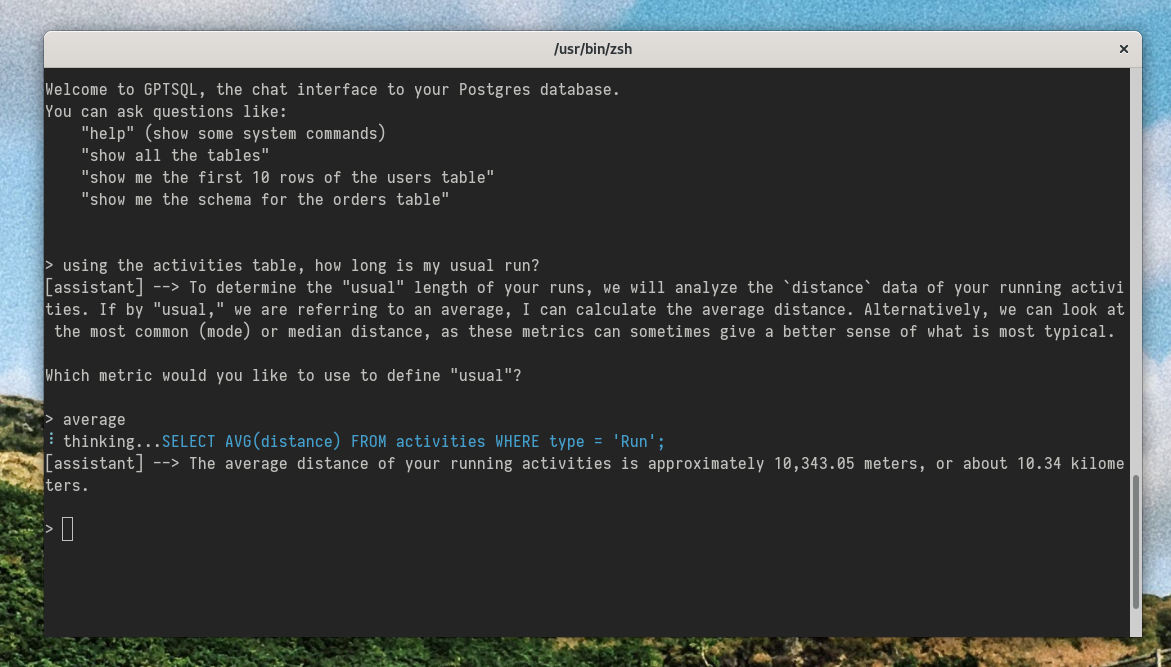

(I used an awesome project called “gptsql” for the second screenshot. More details on this below.)

Now, I’ll briefly explain how I got everything set up.

To load data from Strava, I am simply using their REST API. More specifically, I used node-strava-v3 which is a Node.js wrapper around the API (which is slightly nicer than the Swagger-generated bindings). First, I had to create a Strava Application, and then I created a script that goes through the OAuth flow using the browser:

$ bun get-access-token.ts

Go to URL <https://www.strava.com/oauth/authorize?client_id=123456&redirect_uri=http%3A%2F%2Flocalhost%2Fexchange_token&response_type=code&scope=activity%3Awrite%2Cactivity%3Aread%2Cactivity%3Aread_all> and authorize application

Once you have authorized, you will be redirected to a 'localhost' address (don't worry if you see a 'This site can’t be reached' message)

✔ Copy the whole URL of the page from the browser and paste it here : … <http://localhost/exchange_token?state=&code=3ef024236b8c48891a23d318b9256fdf571210e8&scope=read,activity:write,activity:read,activity:read_all>

Successfully retrieved access token: <redacted-access-token>

With the access token that this script prints out, we now have access to the Strava API. You can find the full API reference here.

To load data into Postgres, I am simply using node-postgres. The tricky bit was setting up the schema. To avoid writing the 52-columns CREATE TABLE statement myself, I took a sample JSON activity, and used a website that converts JSON to Postgres DDL. Fortunately, it also generated the INSERT statement for me:

INSERT INTO activities (

"resource_state",

"athlete.id",

"athlete.resource_state",

"name",

"distance",

"moving_time",

"elapsed_time",

"total_elevation_gain",

"type",

"sport_type",

"workout_type",

"id",

"start_date",

"start_date_local",

"timezone",

"utc_offset",

"location_city",

"location_state",

"location_country",

"achievement_count",

"kudos_count",

"comment_count",

"athlete_count",

"photo_count",

"map.id",

"map.summary_polyline",

"map.resource_state",

"trainer",

"commute",

"manual",

"private",

"visibility",

"flagged",

"gear_id",

"start_latlng",

"end_latlng",

"average_speed",

"max_speed",

"average_cadence",

"average_temp",

"has_heartrate",

"average_heartrate",

"max_heartrate",

"heartrate_opt_out",

"display_hide_heartrate_option",

"elev_high",

"elev_low",

"upload_id",

"upload_id_str",

"external_id",

"from_accepted_tag",

"pr_count",

"total_photo_count",

"has_kudoed"

) VALUES (...);One more thing to keep in mind is that the API only returns pages of at most 200 activities. So, my code has to traverse each activity on each page and INSERT/UPDATE in Postgres accordingly. The script supports a regular mode, and an “upsert” mode:

# Only loads new activities into Postgres.

$ bun load-activities <access-token>

# Update data about activities that are already in the database.

$ bun load-activities --update-existing <access-token>

To use English to talk to the database, I found a neat project called “gptsql”. The usage is really simple:

- Run

gptsqlin a shell. - It asks you for your Postgres connection details (hostname, username, password).

- It asks you for your OpenAI API key.

- It asks you which OpenAI model you’d like to use (GPT 3.5 or GPT 4).

- Done! You’re now in a “English shell” (as opposed to a “SQL Shell”) where you can talk to your data.

I was indeed able to ask my database some questions in plain English, such as “how long is my average run, in minutes?”:

> how long is my average run, in minutes. use the activities table.

⠙ thinking...SELECT AVG(elapsed_time) / 60 as average_duration_minutes FROM activities WHERE type = 'Run';

[assistant] --> It appears that there was an error due to an unexpected closure of the SSL connection during the database query, which is a technical issue. Let me reattempt the query.

[assistant] --> The average distance of your running activities is approximately 10,343.05 meters, or about 10.34 kilometers. However, to convert this to time in minutes, we need to use the average pace or speed at which you run, which is not provided by the distance alone.

To calculate the average duration of the running activities in minutes, we need to query for the average of the time duration (not distance) for running activities. This could be represented by the `elapsed_time` or another time-related column.

Let's perform that query now.

[assistant] --> The average duration of your running activities is approximately 3510.6 seconds.

To convert this to minutes, we divide by 60:

\\( \\text{Average duration in minutes} = \\frac{3510.6 \\text{ seconds}}{60} \\)

Let's calculate the exact value.

[assistant] --> The average duration of your running activities is approximately 58.51 minutes.

[assistant] --> The average duration of your running activities, calculated directly from the `activities` table, is approximately 58.51 minutes.

>

The AI needs quite a bit of hinting, and some back and forth discussion in order to answer more complex queries. However, Scott Persinger deserves a ton of praise for getting this out there since it’s really super useful. Thank you!

As for me, now that I have this data and I can query it in English or SQL, I’m on the lookout for interesting things to do with it. Furthermore, feel free to submit improvements to my repository on GitHub. I even have a list of TODO items that others could help with.

Feel free to reach out on Twitter!