Async Queue – One of My Favorite Programming Interviews (Can AI Break It?)

For the past 7+ years, I've been conducting a programming interview that has been a true personal favorite of mine. It was passed down to me by good friends (Jeremy Kaplan and Carl Sverre; I believe it was the latter who invented it). We have probably given this interview somewhere between 500 and 1,000 times across different companies, and googling "async queue interview" turns up tons of results. So it's probably fine for me to blog about it.

My main goal with this blog post is to discuss why I like the interview so much. I'll also get into how AI does on this interview today (knowing, of course, that AI is advancing so quickly that this part will probably be obsolete soon).

The beginning of the interview

The story goes as follows, or so I remember... We are working on a client, like a web app for instance. And our client talks to a server. But that server is faulty!! If it has to handle multiple requests at once, it starts to break down. So, we decide to make our server's life easier by trying to ensure, from the client, that it doesn't ever have to handle more than one request at once (at least from the same client, so we can assume this is a single-server per-client type of architecture).

The other key constraint is that our client is single-threaded. The interview can be given in JavaScript (where candidates can more or less assume single-threadedness) or in any other language (even just pseudo-code), but single-threadedness is an essential part of how the interview is given.

So as it stands, our client is currently calling the following send function all over the codebase:

```typescript
declare function send<P>(
  payload: P,
  callback: () => void
): void;
```

This send function is a black box for us in the context of this exercise. We won't see its implementation, nor should we care too much about it. All it does is send some payload to the server and then call the callback once the server has finished processing that payload (whether the processing was successful or not).

So, we'd like to implement a new function that we can start using all over the codebase called sendOnce:

```typescript
declare function sendOnce<P>(
  payload: P,
  callback: () => void
): void;
```

This function should, somehow, guarantee that no more than a single request is being handled by the server (for the same client) at any given point in time.
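When experimenting with sendOnce outside an interview, it helps to have a stand-in for the black-box send. Below is a minimal sketch of such a test double, assuming a TypeScript setup; createFakeServer, completeNext, inFlight and maxInFlight are hypothetical names for illustration, not part of the interview. Requests stay "in flight" until the test explicitly completes them, and the fake records the highest number of simultaneous requests, which is exactly the number sendOnce must keep at 1.

```typescript
type Callback = () => void;

// Hypothetical test double for the black-box `send`.
interface FakeServer<P> {
  send: (payload: P, callback: Callback) => void;
  completeNext: () => void;  // the "server" finishes its oldest in-flight request
  inFlight: () => number;    // requests currently being processed
  maxInFlight: () => number; // highest concurrency ever observed
  received: P[];             // payloads in the order the server saw them
}

function createFakeServer<P>(): FakeServer<P> {
  const pending: Callback[] = [];
  const received: P[] = [];
  let max = 0;
  return {
    send(payload, callback) {
      received.push(payload);
      pending.push(callback);
      max = Math.max(max, pending.length);
    },
    completeNext() {
      const callback = pending.shift();
      if (callback === undefined) throw new Error("no request in flight");
      callback(); // like the real send: call back once processing is done
    },
    inFlight: () => pending.length,
    maxInFlight: () => max,
    received,
  };
}

// Two raw `send` calls overlap, which is precisely what sendOnce must prevent.
const server = createFakeServer<string>();
server.send("a", () => {});
server.send("b", () => {});
console.log(server.maxInFlight()); // 2
```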

Here's a naive, faulty implementation:

```typescript
let requestQueue: Array<{ payload: unknown, callback: () => void }> = [];

function sendOnce<P>(payload: P, callback: () => void) {
  function processNextRequest() {
    if (requestQueue.length !== 0) {
      const { payload, callback } = requestQueue.shift() as { payload: unknown, callback: () => void };
      send(payload, () => {
        callback();
        processNextRequest();
      });
    }
  }

  if (requestQueue.length === 0) {
    send(payload, () => {
      callback();
      processNextRequest();
    });
  } else {
    requestQueue.push({ payload, callback });
  }
}
```

The bug in this implementation is that if sendOnce is called again before the previous request has finished, we violate the "one request at a time" requirement. The queue is empty while the first request is in flight (in fact, nothing is ever pushed onto it, because the push only happens when the queue is already non-empty), so every call goes straight to send. A proper implementation needs a requestQueue as well as an isProcessing flag:

```javascript
let isProcessing = false;
let requestQueue = [];

function sendOnce(payload, callback) {
  requestQueue.push({ payload, callback });
  // Only start processing if nothing is in flight; otherwise the
  // in-flight request's callback will pick this one up later.
  if (!isProcessing) {
    processNextRequest();
  }
}

function processNextRequest() {
  if (requestQueue.length === 0) {
    isProcessing = false;
    return;
  }

  isProcessing = true;
  const { payload, callback } = requestQueue.shift();
  // Wrap the caller's callback so the next request is only sent
  // after the server has finished this one.
  send(payload, () => {
    callback();
    processNextRequest();
  });
}
```

Now that we have the proper implementation, we can start to see why this interview is so interesting:

- Can candidates reason about tricky flag logic and write bug-free code? Can they read code and debug it in their head?

- Can candidates come up with the callback-wrapping logic that is required to make this work?

- Will candidates get stuck on the single-threaded nature of JavaScript and try to write code that works in a multi-threaded environment (i.e., multiple threads trying to read the requestQueue array)?
  - This happens a lot, since the single-threaded nature of JavaScript is not well understood, especially by less experienced engineers. A lot of candidates start writing while loops that iterate over the queue, which block the thread.
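To make the difference between the faulty and the proper implementation concrete, here is a sketch that runs both against the same fake send (a hypothetical stub that only finishes requests when told to). Both versions are wrapped in hypothetical factories (createNaiveSendOnce, createSendOnce) so that send can be passed in as a parameter; the queue logic itself mirrors the code above, with types added.

```typescript
type Callback = () => void;
type Send<P> = (payload: P, callback: Callback) => void;

// Hypothetical fake server: requests stay in flight until finishAll() runs.
function createFakeSend() {
  const pending: Callback[] = [];
  const stats = { inFlight: 0, maxInFlight: 0, order: [] as string[] };
  const send: Send<string> = (payload, done) => {
    stats.order.push(payload);
    stats.inFlight++;
    stats.maxInFlight = Math.max(stats.maxInFlight, stats.inFlight);
    pending.push(() => { stats.inFlight--; done(); });
  };
  const finishAll = (): void => {
    while (pending.length > 0) pending.shift()!();
  };
  return { send, stats, finishAll };
}

// Condensed copy of the naive version (same behavior, same bug).
function createNaiveSendOnce<P>(send: Send<P>) {
  const requestQueue: Array<{ payload: P; callback: Callback }> = [];
  const processNextRequest = (): void => {
    const next = requestQueue.shift();
    if (next) send(next.payload, () => { next.callback(); processNextRequest(); });
  };
  return (payload: P, callback: Callback): void => {
    if (requestQueue.length === 0) {
      send(payload, () => { callback(); processNextRequest(); });
    } else {
      requestQueue.push({ payload, callback });
    }
  };
}

// The queue + isProcessing version, with types added.
function createSendOnce<P>(send: Send<P>) {
  const requestQueue: Array<{ payload: P; callback: Callback }> = [];
  let isProcessing = false;
  const processNextRequest = (): void => {
    const next = requestQueue.shift();
    if (next === undefined) {
      isProcessing = false; // drained: the next sendOnce call restarts processing
      return;
    }
    isProcessing = true;
    send(next.payload, () => {
      next.callback();      // notify the original caller first...
      processNextRequest(); // ...then hand the server the next request
    });
  };
  return (payload: P, callback: Callback): void => {
    requestQueue.push({ payload, callback });
    if (!isProcessing) processNextRequest();
  };
}

const naive = createFakeSend();
const naiveSendOnce = createNaiveSendOnce(naive.send);
["a", "b", "c"].forEach((p) => naiveSendOnce(p, () => {}));
naive.finishAll();

const fixed = createFakeSend();
const sendOnce = createSendOnce(fixed.send);
["a", "b", "c"].forEach((p) => sendOnce(p, () => {}));
fixed.finishAll();

console.log(naive.stats.maxInFlight, fixed.stats.maxInFlight); // 3 1
console.log(fixed.stats.order.join(","));                      // a,b,c
```

Keeping requestQueue and isProcessing inside a closure rather than as module-level globals is a choice of this sketch: it lets each test start from a fresh queue, and a client could keep one queue per server.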

What I've described so far is really just the start of the interview. Let's get into what comes next!

Getting into the "meatier" parts of the interview

After the candidate has successfully implemented sendOnce, we can start to make the interview more interesting. The first thing we'd typically ask candidates to do is to add a third parameter to it so that it now has the following signature:

```typescript
declare function sendOnce<P>(
  payload: P,
  callback: () => void,
  minDelayMs: number
): void;
```

The new argument lets clients send requests to the server with a minimum delay (i.e., we don't want the server to begin processing our request until at least minDelayMs milliseconds after we call sendOnce).

If you have any experience with JavaScript, the solution is fairly straightforward: we can simply wrap the entire body of sendOnce in a setTimeout.

```javascript
function sendOnce(payload, callback, minDelayMs = 0) {
  // The request only joins the queue once the delay has elapsed.
  setTimeout(() => {
    requestQueue.push({ payload, callback });
    if (!isProcessing) {
      processNextRequest();
    }
  }, minDelayMs);
}
```

For candidates without JavaScript experience, or those doing this interview in pseudo-code, you have to tell them that there's another function available to them now with the following signature:

```typescript
declare function setTimeout(callback: () => void, delayMs: number): number;
```

For more experienced candidates, you can include the minDelayMs requirement from the very beginning of the interview. This makes the problem more challenging, since the candidate has to think about the queue and the minimum delay at the same time. Strong engineers, however, can break the problem into these two parts and solve both together. And if someone is struggling, you can always break it apart for them: simply tell the candidate to ignore minDelayMs for a bit, and then reintroduce this requirement later.
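The setTimeout-based sendOnce depends on real timers, which makes it awkward to test. One possible approach, sketched below, is to inject the timer function instead of calling the global setTimeout directly. Schedule, fakeSchedule and advanceTo are hypothetical helpers for illustration; in production you would pass something like `(fn, ms) => { setTimeout(fn, ms); }`.

```typescript
type Callback = () => void;
type Send<P> = (payload: P, callback: Callback) => void;
// Hypothetical timer abstraction injected into the queue.
type Schedule = (fn: Callback, delayMs: number) => void;

function createSendOnce<P>(send: Send<P>, schedule: Schedule) {
  const requestQueue: Array<{ payload: P; callback: Callback }> = [];
  let isProcessing = false;

  const processNextRequest = (): void => {
    const next = requestQueue.shift();
    if (next === undefined) {
      isProcessing = false;
      return;
    }
    isProcessing = true;
    send(next.payload, () => {
      next.callback();
      processNextRequest();
    });
  };

  // Same wrapping as the setTimeout version: enqueue only after minDelayMs.
  return (payload: P, callback: Callback, minDelayMs = 0): void => {
    schedule(() => {
      requestQueue.push({ payload, callback });
      if (!isProcessing) processNextRequest();
    }, minDelayMs);
  };
}

// A hand-cranked fake clock, so delays can be checked without real waiting.
let now = 0;
let timers: Array<{ at: number; fn: Callback }> = [];
const fakeSchedule: Schedule = (fn, delayMs) => {
  timers.push({ at: now + delayMs, fn });
};
function advanceTo(t: number): void {
  now = t;
  const due = timers.filter((timer) => timer.at <= t).sort((x, y) => x.at - y.at);
  timers = timers.filter((timer) => timer.at > t);
  due.forEach((timer) => timer.fn());
}

const sentAt: Array<[string, number]> = [];
const sendOnce = createSendOnce<string>((payload, done) => {
  sentAt.push([payload, now]); // record when the "server" received each request
  done();                      // this fake server finishes instantly
}, fakeSchedule);

sendOnce("slow", () => {}, 100);
sendOnce("fast", () => {}, 10);
advanceTo(10);
advanceTo(100);
console.log(JSON.stringify(sentAt)); // [["fast",10],["slow",100]]
```

The output also shows a property of the setTimeout-wrapping approach that is worth discussing with candidates: since each request only joins the queue when its own timer fires, a later call with a shorter delay can reach the server before an earlier call with a longer one.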

What I particularly like about this part of the interview is that you're testing the candidate's ability to handle new requirements, and again you're testing how they work in a single-threaded environment (a lot of people resort to some kind of blocking, sleep-style function call to solve the delay part of this problem).
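To see why a blocking sleep fails, here is a small sketch of the busy-wait version candidates sometimes write (blockingSleep is a hypothetical name). While it spins, nothing else on the single thread can run: not timer callbacks, and in a real client not the network callbacks that send relies on either.

```typescript
// Anti-pattern: a busy-wait "sleep" that monopolizes the only thread.
function blockingSleep(ms: number): void {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // spin: no timers, network callbacks or UI events can run in here
  }
}

let timerFired = false;
setTimeout(() => { timerFired = true; }, 0);

blockingSleep(50);
// The timer has been due for roughly 50 ms, but its callback still hasn't run:
// callbacks only run once the current synchronous code has finished.
console.log(timerFired); // false
```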

What comes after this?

After sendOnce is implemented, it's time to make the interview a lot more interesting. This is where you can start to distinguish less skilled software engineers from more skilled software engineers. You can do this by adding a bunch of new requirements to the problem:

- Implement sendMany, which is a sendOnce that should happen every X seconds

- Implement a cancellation mechanism for sendOnce calls
  - (let API design up to the candidate)

- Implement a way for requests to be retried if they fail

- Write tests!

- Implement a full-blown AsyncQueue class with more methods that allow clients to define some type of priority for different requests
  - (let API design up to the candidate)
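The cancellation and priority items leave API design to the candidate, so there's no single right answer. Here is one possible shape, sketched under my own assumptions: a hypothetical AsyncQueue class whose enqueue method takes an optional numeric priority (higher goes first) and returns a cancel function that only succeeds while the request is still waiting in the queue.

```typescript
type Callback = () => void;
type Send<P> = (payload: P, callback: Callback) => void;

interface QueuedRequest<P> {
  payload: P;
  callback: Callback;
  priority: number; // higher numbers are sent first (an assumption of this sketch)
}

// Hypothetical API: enqueue() takes an optional priority and returns a cancel function.
class AsyncQueue<P> {
  private readonly send: Send<P>;
  private readonly queue: QueuedRequest<P>[] = [];
  private isProcessing = false;

  constructor(send: Send<P>) {
    this.send = send;
  }

  enqueue(payload: P, callback: Callback, priority = 0): () => boolean {
    const request: QueuedRequest<P> = { payload, callback, priority };
    this.queue.push(request);
    if (!this.isProcessing) this.processNext();
    // Cancelling only succeeds while the request is still waiting; once it
    // has been handed to `send`, there is no way to recall it.
    return () => {
      const index = this.queue.indexOf(request);
      if (index === -1) return false;
      this.queue.splice(index, 1);
      return true;
    };
  }

  private processNext(): void {
    if (this.queue.length === 0) {
      this.isProcessing = false;
      return;
    }
    // The array stays in insertion order, so taking the first maximum keeps
    // FIFO order among requests with equal priority.
    let best = 0;
    for (let i = 1; i < this.queue.length; i++) {
      if (this.queue[i].priority > this.queue[best].priority) best = i;
    }
    const [request] = this.queue.splice(best, 1);
    this.isProcessing = true;
    this.send(request.payload, () => {
      request.callback();
      this.processNext();
    });
  }
}

// Usage with a fake server that finishes requests only when we say so.
const pending: Callback[] = [];
const order: string[] = [];
const q = new AsyncQueue<string>((payload, done) => {
  order.push(payload);
  pending.push(done);
});

q.enqueue("low", () => {}); // sent right away: the queue was idle
q.enqueue("normal", () => {});
const cancelSpam = q.enqueue("spam", () => {});
q.enqueue("urgent", () => {}, 10);
console.log(cancelSpam()); // true: "spam" was still waiting
while (pending.length > 0) pending.shift()!(); // let the fake server finish everything
console.log(order.join(",")); // low,urgent,normal
```

A linear scan keeps the sketch short; with many queued requests, a binary heap would be the natural upgrade, at the cost of extra bookkeeping to support cancellation.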

These questions are really helpful for testing a whole bunch of different skills. Can candidates evolve their code with new requirements in a way that keeps it elegant? How do they approach writing tests? Which edge cases do their tests cover?
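As an illustration of the kind of edge cases worth testing, here is a sketch of two tests against a compact, parameterized copy of the queue-based sendOnce (createSendOnce and check are hypothetical helpers; a real suite would use a test framework). The first targets a subtle case: a callback that calls sendOnce again. Because the callback runs while isProcessing is still true, the follow-up is queued rather than sent immediately; an implementation that cleared the flag before invoking the callback would end up with two requests in flight.

```typescript
type Callback = () => void;

// Compact copy of the queue-based sendOnce, parameterized by `send` for testing.
function createSendOnce<P>(send: (payload: P, cb: Callback) => void) {
  const queue: Array<{ payload: P; callback: Callback }> = [];
  let isProcessing = false;
  const processNext = (): void => {
    const next = queue.shift();
    isProcessing = next !== undefined;
    if (next) send(next.payload, () => { next.callback(); processNext(); });
  };
  return (payload: P, callback: Callback): void => {
    queue.push({ payload, callback });
    if (!isProcessing) processNext();
  };
}

// Tiny assertion helper (hypothetical; any test framework's assertions would do).
function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(`FAILED: ${message}`);
}

// Fake server that finishes requests only when told to.
const pending: Callback[] = [];
const seen: string[] = [];
const sendOnce = createSendOnce<string>((p, done) => { seen.push(p); pending.push(done); });
const finishOne = (): void => pending.shift()!();

// Edge case 1: a callback that re-enters sendOnce must not bypass the queue.
sendOnce("a", () => sendOnce("a-followup", () => {}));
sendOnce("b", () => {});
finishOne(); // "a" finishes; its callback queues "a-followup" behind "b"
check(pending.length === 1, "never more than one request in flight");
check(seen.join(",") === "a,b", "b is sent before a-followup");
finishOne();
finishOne();
check(seen.join(",") === "a,b,a-followup", "follow-up is eventually sent");

// Edge case 2: once the queue drains, a new call must start immediately.
check(pending.length === 0, "queue fully drained");
sendOnce("c", () => {});
check(pending.length === 1 && seen[seen.length - 1] === "c", "idle queue restarts");
console.log("all checks passed");
```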

Can AI crack this interview?

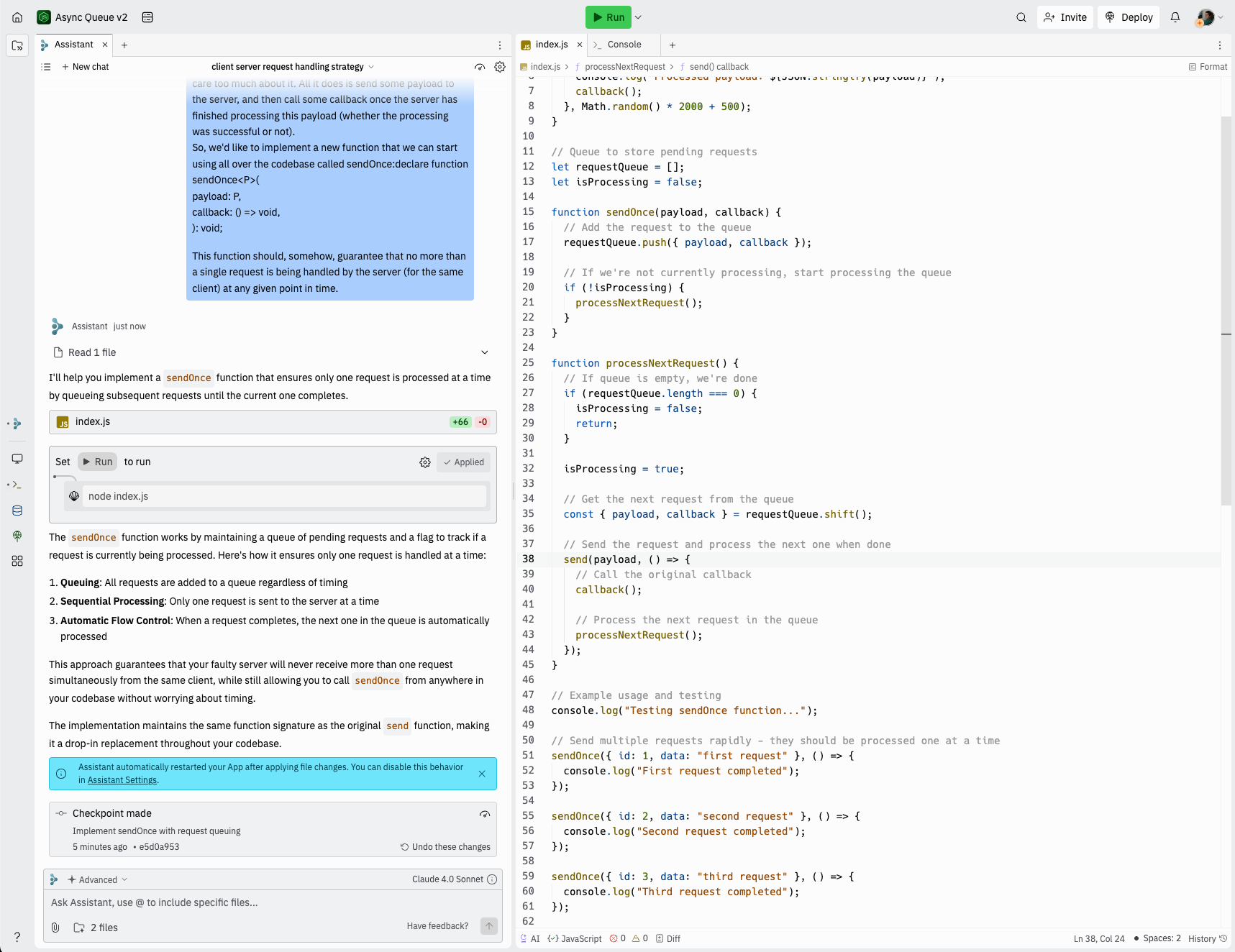

Absolutely, yes (with proper prompting, and some caveats). I gave the Replit Agent a try on this interview earlier today with Claude Sonnet 4.0 and it was able to do a very good job with sendOnce (even with the minimum delay argument).

However, the code was fairly convoluted, and I had to prompt it a few more times to simplify it. For the more advanced requirements, it started writing buggier code.

So, when giving this interview, should we let candidates use AI? Yes. (I'd even recommend giving this interview with the Replit UI + Replit Agent.)

The number of engineers who are not leveraging LLMs to write code is shrinking by the day. And with the exception of some fairly niche situations where LLMs are still somewhat useless (like low-level systems engineering), they are incredibly helpful. And that only makes me like this interview even more!

Because I explicitly tell candidates upfront that they can use AI to speed up their work, the exercise becomes more interesting. The very best candidates will use AI for auto-completion and other things, but quickly, and correctly, review all the code it generates. This is a good way to test how "AI-native" the candidate is. The more AI-native, the more quickly they should be able to handle each new set of requirements. And that is exactly what I'm looking for these days – engineers who can empower themselves and become faster with these new tools.

And especially for the section of the interview where candidates have to write tests, AI can be a very powerful assistant, but the prompts have to be well-structured and carefully written. Furthermore, all the AI-generated code must be properly reviewed.

I'd love to hear more about how others are adopting AI in their technical interviews.

Feel free to follow me on Twitter/X if you want!